Importing data from a data dump

This document walks through two different options for importing data dumps into Faros.

The generic Faros Sheets source can be leveraged to read from an xlsx file. The input file can be a local spreadsheet file, a Google Sheet or a spreadsheet file in an S3 bucket.

We will use a local spreadsheet file for the examples below.

For writing into the Faros graph, we can use the Faros Destination in two ways:

- Using a JSONata expression

- Using a custom converter.

We will also use the Airbyte Local CLI. This tool runs the source (Sheets) and destination (Faros Destination). It pipes the source output records (basically rows from the sheet) into the destination code so that it converts these rows into objects in our schema and then writes them into the graph.

As an example, let's say we have a dump of data representing tasks in a task management system.

| ID | Name | Description | CreatedAt | URL |

|---|---|---|---|---|

| t1 | Design Homepage Mockup | Create a high-fidelity mockup for the new homepage design, including header, footer, and main content sections... | 2023-11-01 | http://taskmanagement.example.com/tasks/1 |

| t2 | Implement Authentication System | Develop a secure authentication system that allows users to log in or register. Must support email/password a... | 2023-11-02 | http://taskmanagement.example.com/tasks/2 |

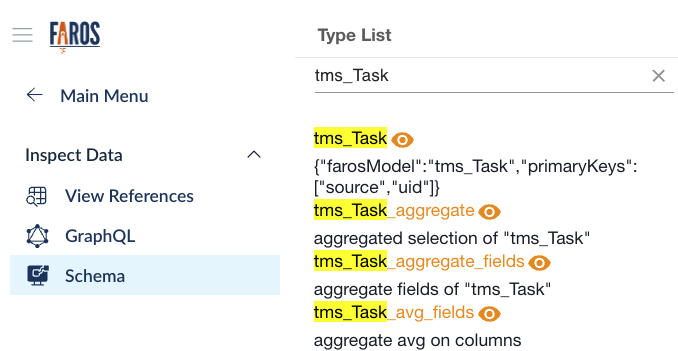

Before diving into the options for writing into the Faros graph, we need to get familiar with the Task (tms_Task) model in the Faros schema.

We can search for tms_Task

And then click on tms_Task to get to the detailed view of the model.

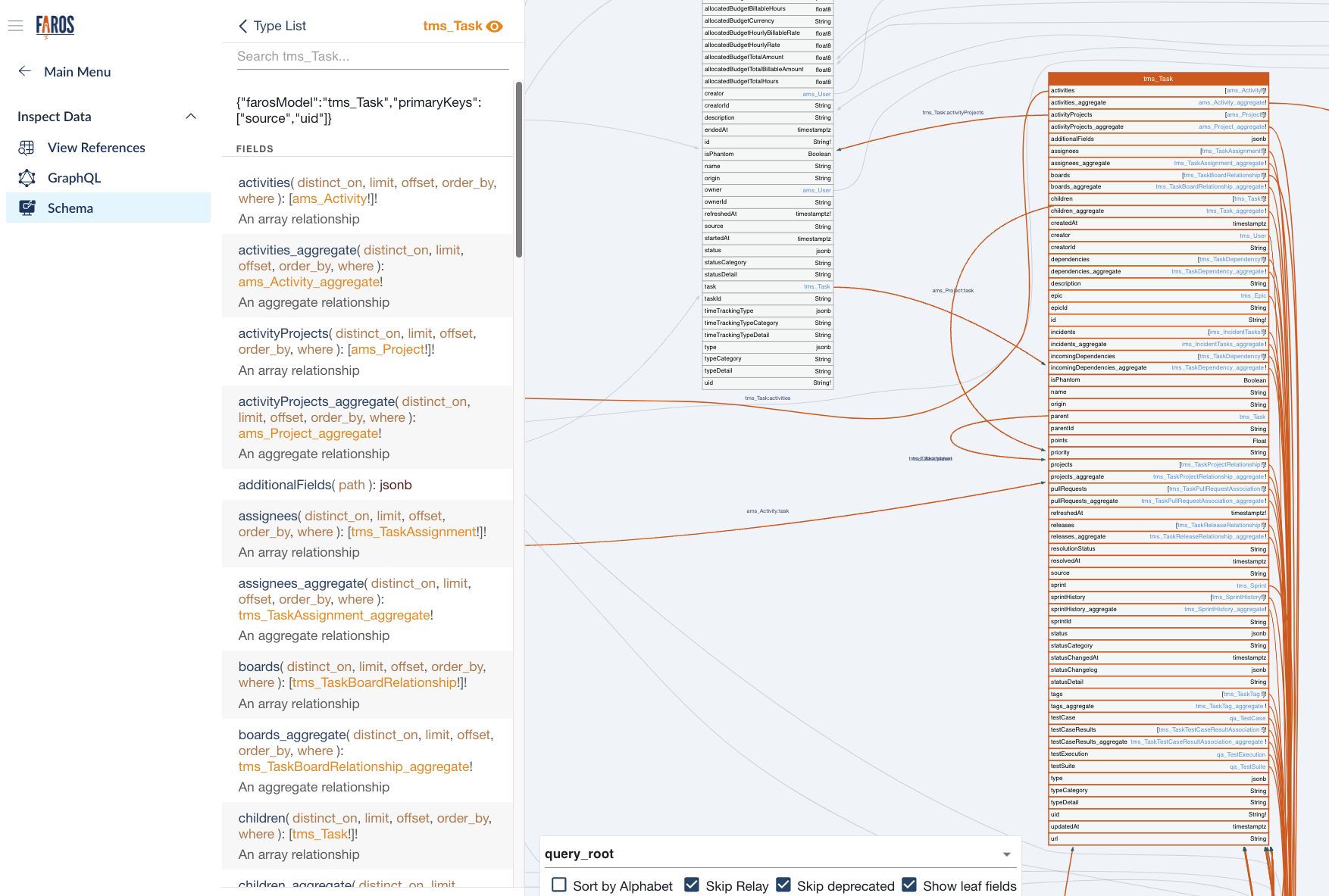

From primaryKeys we now know that we are required to write a source and a uid(unique id) fields. The source field corresponds to the source system where the data is coming from. Since we'll be importing data using the Sheets source, we'll use Sheets for this field. Since we have an ID column in our data dump that uniquely identifies tasks, we can use this as the uid field in the Faros tms_Task model.

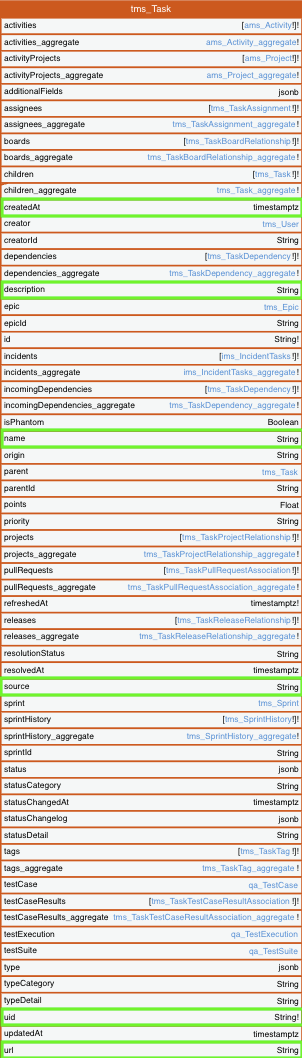

Upon further inspection, we find good candidate fields for the data we have in the dump:

We now have a good understanding of how to map the data we have in the dump to the tms_Task model.

Option 1: JSONata expression

One can craft a JSONata expression to transform from the Sheets source output records into tms_Task (this is the name of the model in the Faros schema):

export JSONATA_EXPR="""(

data.row.(

{

'tms_Task': {

'uid': ID,

'name': Name,

'description': Description,

'createdAt': CreatedAt,

'url': URL,

'source': 'Sheets'

}

}

)

)"""A note on timestamptz fields

In this example, the

createdAtfield type istimestamptz. The JSONata converter internally takes care of transforming the values you provide in fields of this type into the right format.The values can be:

- An integer value representing the timestamp (milliseconds since epoch). E.g.,

1700067799308- The timestamp as a string conforming to the JavaScript format. E.g.,

2023-11-15T17:02:01.611Z

Then run the Airbyte Local CLI command:

bash <(curl -s https://raw.githubusercontent.com/faros-ai/airbyte-local-cli/main/airbyte-local.sh) \

--src 'farosai/airbyte-sheets-source' \

--src.spreadsheet_source.sheet_source "LocalSpreadsheet" \

--src.spreadsheet_source.file "/tasks.xlsx" \

--src-docker-options '-v /path/to/tasks.xlsx:/tasks.xlsx' \

--dst 'farosai/airbyte-faros-destination' \

--dst.edition_configs.api_key $FAROS_API_KEY \

--dst.jsonata_mode 'OVERRIDE' \

--dst.jsonata_expression $JSONATA_EXPR \

--dst.jsonata_destination_models '["tms_Task"]'The command above basically does 3 things:

- Reads from the provided tasks.xlsx file, emitting one record per row. An output record looks like this:

{

"record": {

"stream": "sheets",

"emitted_at": 1699470995245,

"data": {

"sheetName": "Sheet1",

"row": {

"ID": "t1",

"Name": "Design Homepage Mockup",

"Description": "Create a high-fidelity mockup for the new homepage design, including header, footer, and main content sections. Ensure brand consistency.",

"CreatedAt": "2023-11-01T00:00:00.000Z",

"URL": "http://taskmanagement.example.com/tasks/1"

},

"sheetId": "tasks",

"id": "tasks_sheet1_0"

}

},

"type": "RECORD"

}- Transforms each output record into a tms_Task, using the JSONata expression.

- Writes the resulting tms_Task records to the Faros graph.

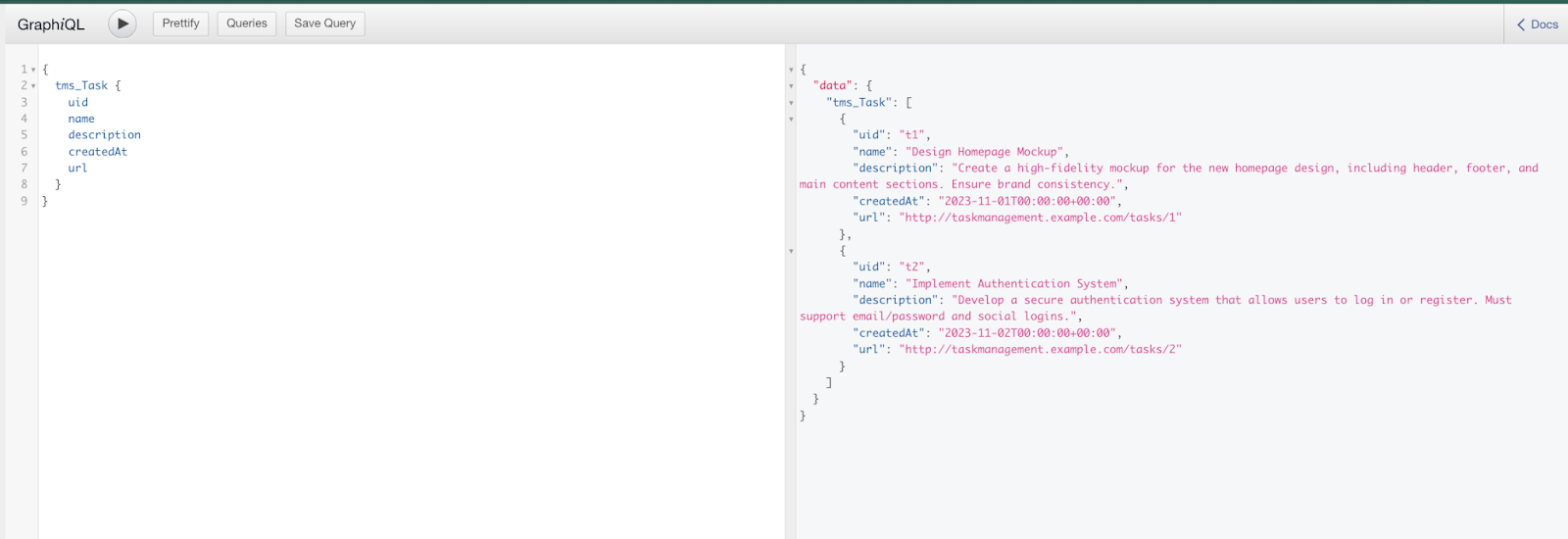

Here's a query showing the records written to the Faros graph:

Option 2: Custom destination converter

While the JSONata language is very rich and flexible, and has the advantage that it is passed as a configuration and so there's no compile/build/deploy step involved in the transformation piece of the process; there's also an option to write Typescript code to achieve the same result.

There's documentation on how to implement this option here, and an example repository where we implement the custom converter.

After building the custom destination docker image as example-destination, we can run the Airbyte Local CLI command:

# Example using a spreadsheet in the local filesystem

bash <(curl -s https://raw.githubusercontent.com/faros-ai/airbyte-local-cli/main/airbyte-local.sh) \

--src 'farosai/airbyte-sheets-source' \

--src.spreadsheet_source.sheet_source "LocalSpreadsheet" \

--src.spreadsheet_source.file "/tasks.xlsx" \

--src.stream_name "tasks" \

--src-docker-options '-v /path/to/tasks.xlsx:/tasks.xlsx' \

--dst 'example-destination' \

--dst.edition_configs.api_key $FAROS_API_KEY \

--dst-stream-prefix 'mysrc__sheets__'# Example using a spreadsheet in an S3 bucket

bash <(curl -s https://raw.githubusercontent.com/faros-ai/airbyte-local-cli/main/airbyte-local.sh) \

--src 'farosai/airbyte-sheets-source' \

--src.spreadsheet_source.sheet_source "S3Spreadsheet" \

--src.spreadsheet_source.file "s3://my-bucket/tasks.xlsx" \

--src.spreadsheet_source.aws_access_key_id "${AWS_ACCESS_KEY_ID}" \

--src.spreadsheet_source.aws_region "us-east-1" \

--src.spreadsheet_source.aws_secret_access_key "${AWS_SECRET_ACCESS_KEY}" \

--src.spreadsheet_source.aws_session_token "${AWS_SESSION_TOKEN}" \

--src.stream_name "tasks" \

--dst 'example-destination' \

--dst.edition_configs.api_key $FAROS_API_KEY \

--dst-stream-prefix 'mysrc__sheets__'This writes exactly the same records as the command that uses the JSONata expression.

Updated 5 months ago