Exporting data to Databricks

This metrics export connector syncs data to Delta Lake on the Databricks Lakehouse. Each report table is written to its own Delta table.

This exporter requires a JDBC driver to connect to the Databricks cluster. By using the driver and the connector, you agree to the JDBC/ODBC driver license, which means you may use this connector only to connect third-party applications to Apache Spark SQL within a Databricks offering over the ODBC and/or JDBC protocols.
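For orientation, a JDBC connection to Databricks is usually described by a single URL assembled from the server hostname, port, HTTP path, and personal access token collected in the setup steps below. The sketch below is a minimal illustration, assuming the Databricks JDBC driver's `jdbc:databricks://` URL format with token authentication (`AuthMech=3`, `UID=token`, `PWD=<token>`). The connector builds its own connection internally; `build_jdbc_url` and `redact` are hypothetical helpers, not part of the connector.

```python
def build_jdbc_url(server_hostname: str, http_path: str, token: str, port: int = 443) -> str:
    """Assemble a Databricks JDBC URL that authenticates with a personal access token.

    Assumption: the Databricks JDBC driver URL format, where AuthMech=3 with
    UID=token means the password (PWD) is a personal access token.
    """
    settings = [
        f"httpPath={http_path}",
        "AuthMech=3",
        "UID=token",
        f"PWD={token}",  # kept last so redact() can mask everything after it
    ]
    return f"jdbc:databricks://{server_hostname}:{port};" + ";".join(settings)


def redact(jdbc_url: str) -> str:
    """Mask the token (assumed to be the final setting) before a URL is logged."""
    prefix, sep, _ = jdbc_url.partition("PWD=")
    return prefix + sep + "****" if sep else jdbc_url


url = build_jdbc_url(
    "abc-12345678-wxyz.cloud.databricks.com",
    "sql/protocolvx/o/1234567489/0000-1111111-abcd90",
    "dapi0123456789abcdefghij0123456789AB",
)
print(redact(url))
```

Because the URL embeds the token, it should be treated as a secret; that is why the sketch masks it before printing.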

Getting started

Databricks AWS Setup

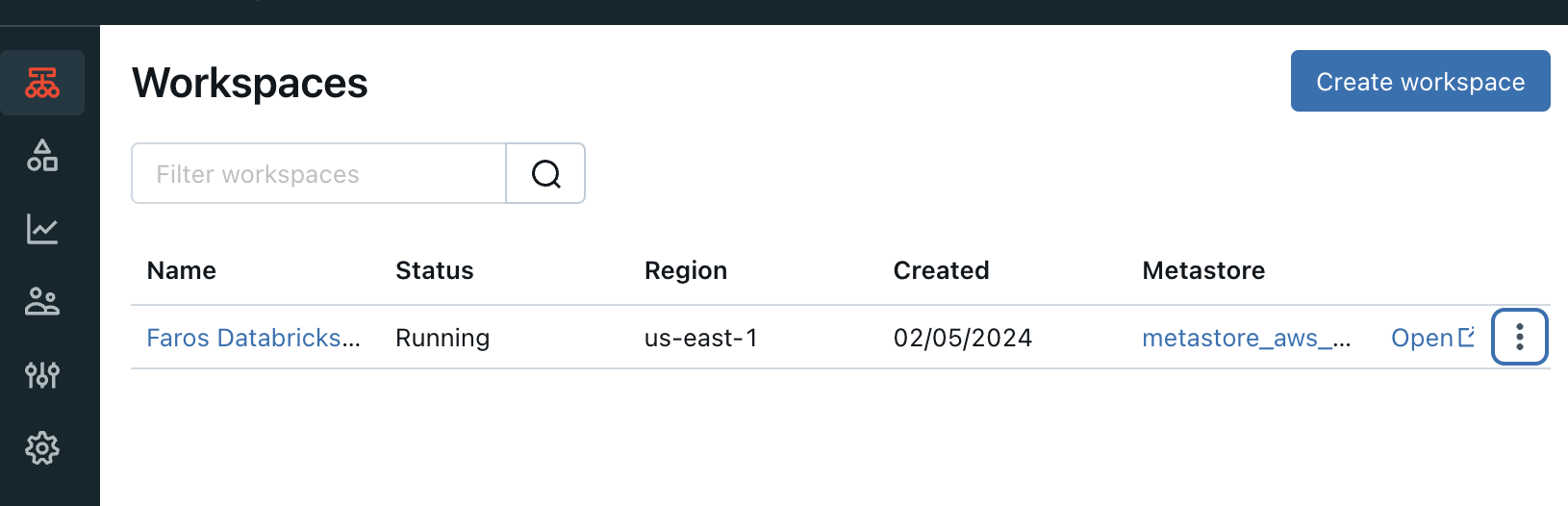

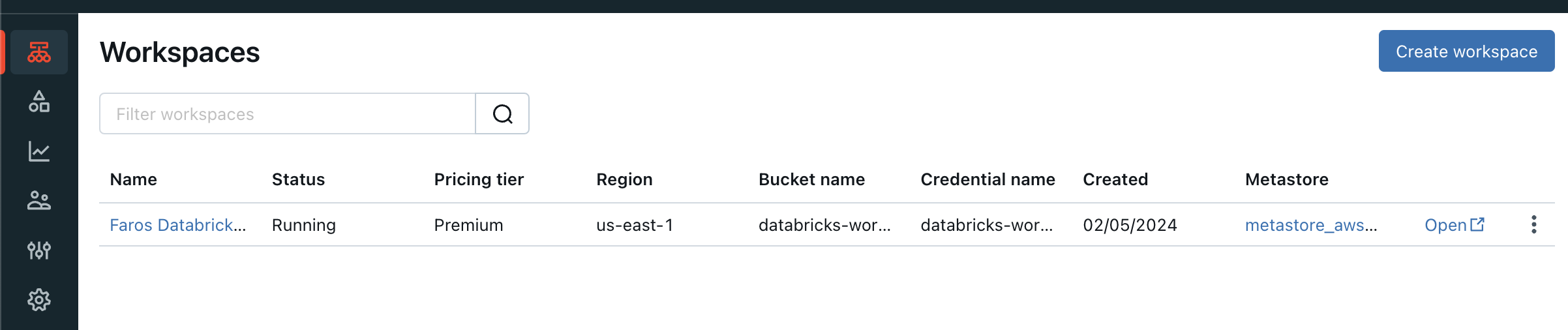

Create a Databricks Workspace

Click Create workspace in your Databricks console and follow the instructions to create a new workspace.

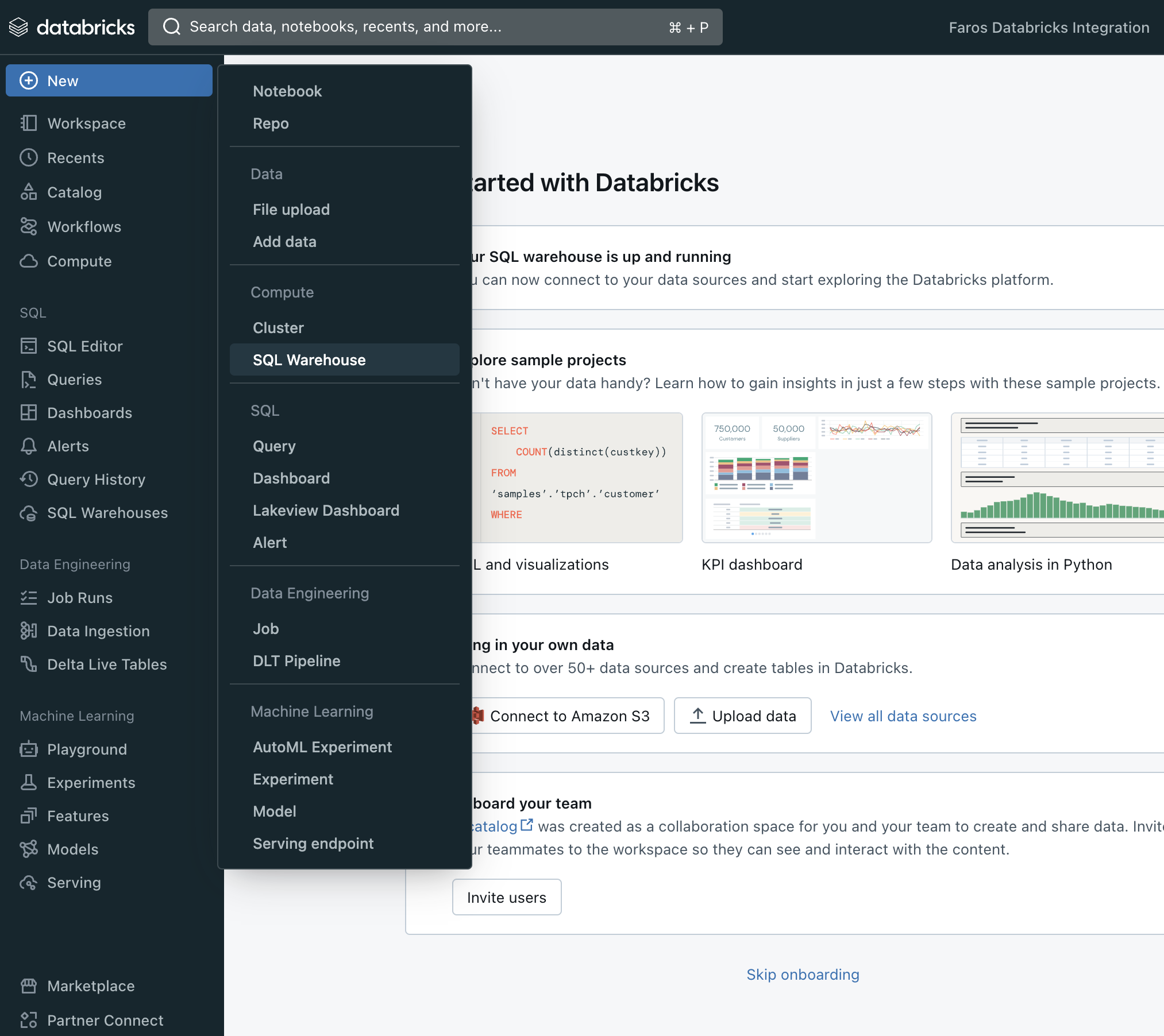

Create Databricks SQL Warehouse

Open the Workspaces tab and open the console of the workspace you just created.

Create a new SQL warehouse.

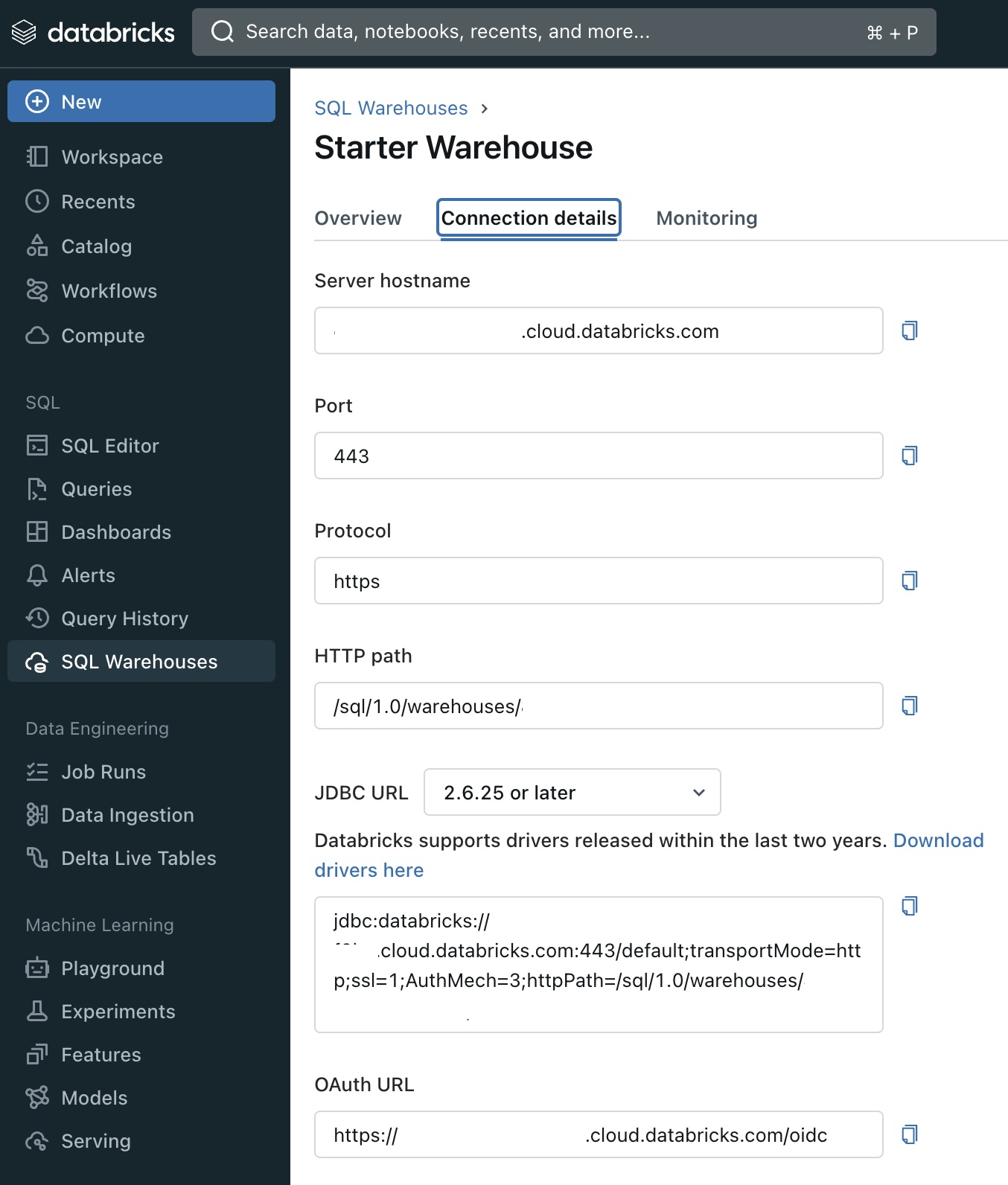
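A warehouse can also be created through the Databricks SQL Warehouses REST API instead of the UI. The sketch below only builds the request and does not send it. It assumes the `POST /api/2.0/sql/warehouses` endpoint, bearer authentication with a personal access token like the one created in the "Create Databricks Token" step, and the `name`, `cluster_size`, `auto_stop_mins`, and `max_num_clusters` body fields. The helper name, warehouse name, and sizing values are illustrative assumptions; check them against the Databricks API reference for your workspace.

```python
import json
import urllib.request


def build_create_warehouse_request(
    workspace_host: str,
    token: str,
    name: str,
    cluster_size: str = "2X-Small",
    auto_stop_mins: int = 10,
) -> urllib.request.Request:
    """Build (but do not send) a request that creates a single-cluster SQL warehouse.

    workspace_host is the workspace hostname, the same value later shown as
    "Server hostname" in the warehouse's Connection details tab.
    """
    body = {
        "name": name,
        "cluster_size": cluster_size,      # illustrative size; choose one that fits the export volume
        "auto_stop_mins": auto_stop_mins,  # stop the warehouse when idle to limit cost
        "max_num_clusters": 1,             # no autoscaling for a simple export target
    }
    return urllib.request.Request(
        url=f"https://{workspace_host}/api/2.0/sql/warehouses",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_create_warehouse_request(
    "abc-12345678-wxyz.cloud.databricks.com",
    "dapi0123456789abcdefghij0123456789AB",
    "faros-metrics-export",  # hypothetical warehouse name
)
# Once the values are confirmed, send it with urllib.request.urlopen(request).
```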

Gather Databricks SQL Warehouse connection details

Navigate to SQL Warehouses, click your warehouse, and switch to the Connection details tab.

Note the Server hostname, Port, and HTTP path values.

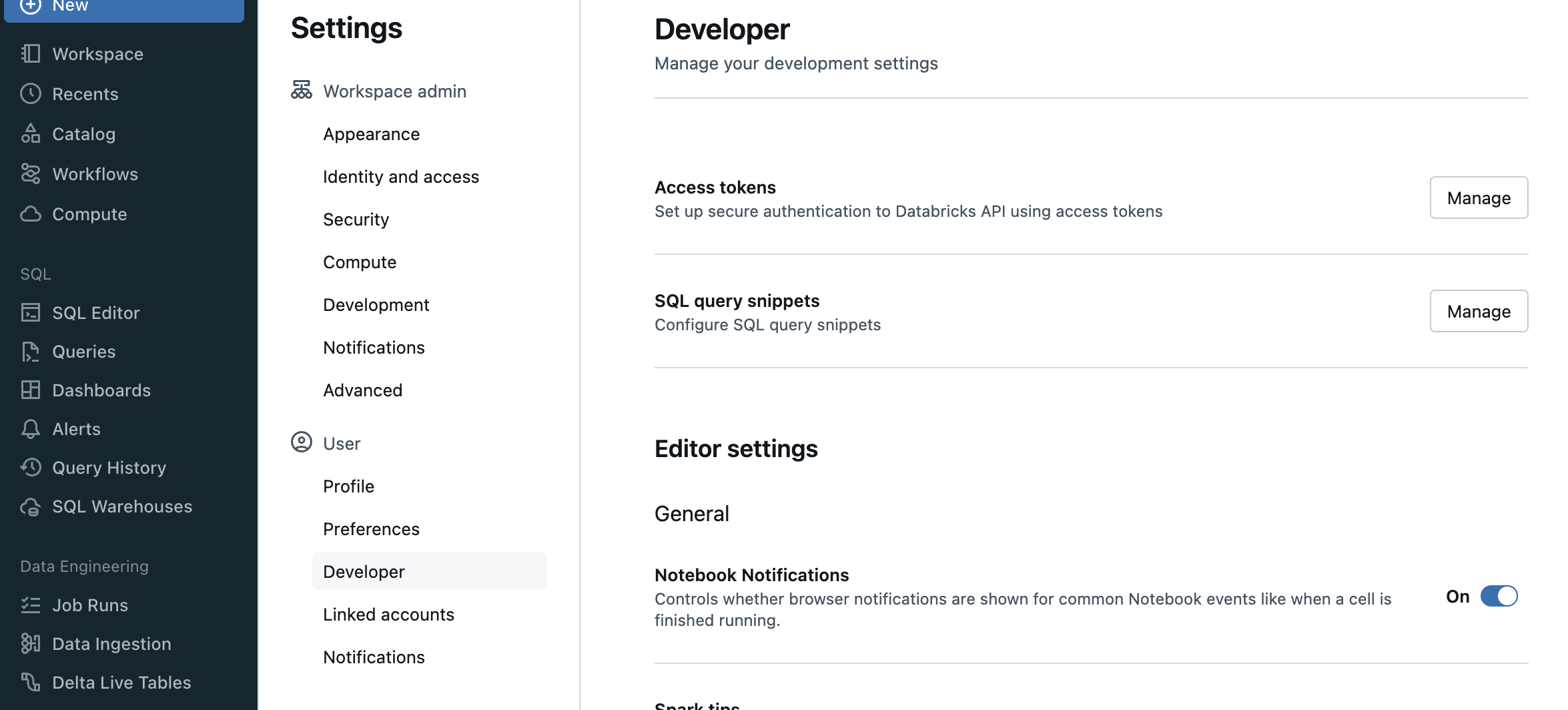

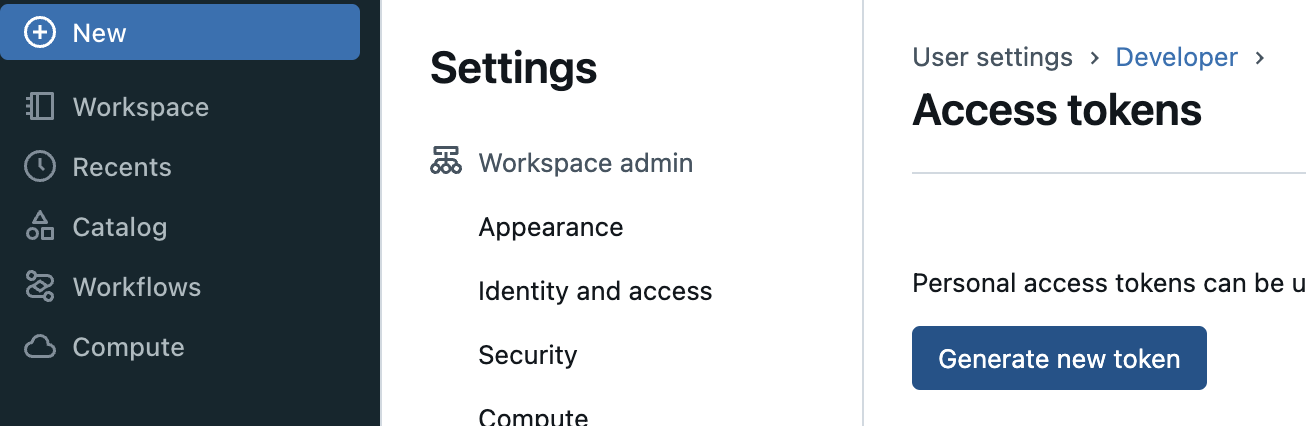
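The same three values can also be read programmatically. The sketch below is minimal and assumes that the Databricks SQL Warehouses API `GET /api/2.0/sql/warehouses/{id}` returns an `odbc_params` object with `hostname`, `port`, and `path` fields. The helper names, warehouse ID, and sample response are hypothetical and for illustration only.

```python
import urllib.request


def build_get_warehouse_request(workspace_host: str, token: str, warehouse_id: str) -> urllib.request.Request:
    """Build (but do not send) a request for a single warehouse's details."""
    return urllib.request.Request(
        url=f"https://{workspace_host}/api/2.0/sql/warehouses/{warehouse_id}",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


def connection_details(warehouse: dict) -> dict:
    """Map the assumed odbc_params fields onto the values the Faros source asks for."""
    odbc = warehouse["odbc_params"]
    return {
        "server_hostname": odbc["hostname"],
        "port": int(odbc.get("port", 443)),  # fall back to the connector's default port
        "http_path": odbc["path"],
    }


# Hypothetical, trimmed response body for illustration only.
sample = {
    "id": "1234567890abcdef",
    "name": "faros-metrics-export",
    "odbc_params": {
        "hostname": "abc-12345678-wxyz.cloud.databricks.com",
        "path": "/sql/1.0/warehouses/1234567890abcdef",
        "protocol": "https",
        "port": 443,
    },
}
print(connection_details(sample))
```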

Create Databricks Token

Open User Settings and go to Access tokens.

Click Generate new token, fill in the optional details, and click Generate. Copy the token right away; Databricks displays it only once.

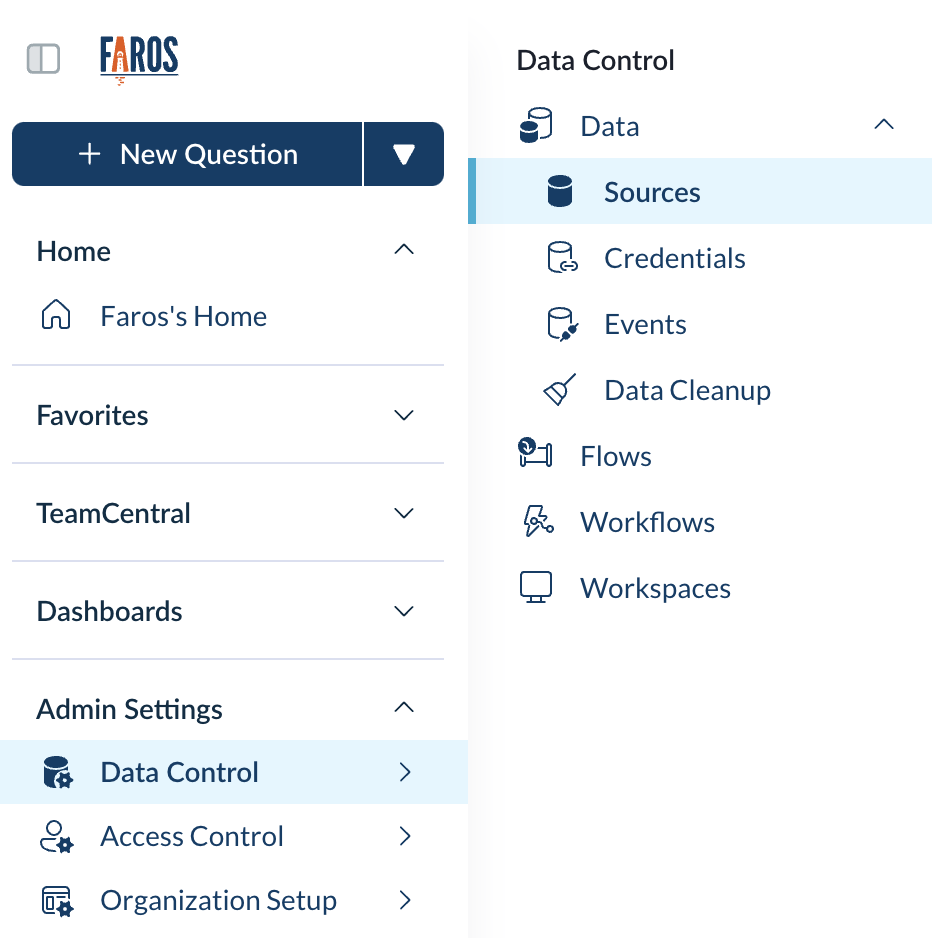
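Before pasting the token into Faros, you may want to confirm that the workspace accepts it. The sketch below is minimal and assumes an authenticated `GET /api/2.0/sql/warehouses` call (the SQL Warehouses list endpoint) returns HTTP 200 for a working token and 401 or 403 otherwise. A 403 can also mean the token's user lacks access rather than that the token is bad. The function names are hypothetical, and the `opener` parameter exists only so the check can be exercised without a network.

```python
import urllib.error
import urllib.request
from typing import Callable


def build_list_warehouses_request(workspace_host: str, token: str) -> urllib.request.Request:
    """Build a request that lists SQL warehouses using bearer-token auth."""
    return urllib.request.Request(
        url=f"https://{workspace_host}/api/2.0/sql/warehouses",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


def token_is_valid(
    workspace_host: str,
    token: str,
    opener: Callable = urllib.request.urlopen,
) -> bool:
    """Return True on HTTP 200, False on 401/403; re-raise any other HTTP error."""
    request = build_list_warehouses_request(workspace_host, token)
    try:
        with opener(request, timeout=10) as response:
            return response.status == 200
    except urllib.error.HTTPError as err:
        if err.code in (401, 403):  # rejected token or insufficient permissions
            return False
        raise


# Usage against a real workspace (performs a network call):
# token_is_valid("abc-12345678-wxyz.cloud.databricks.com", "dapi0123...")
```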

Faros Setup

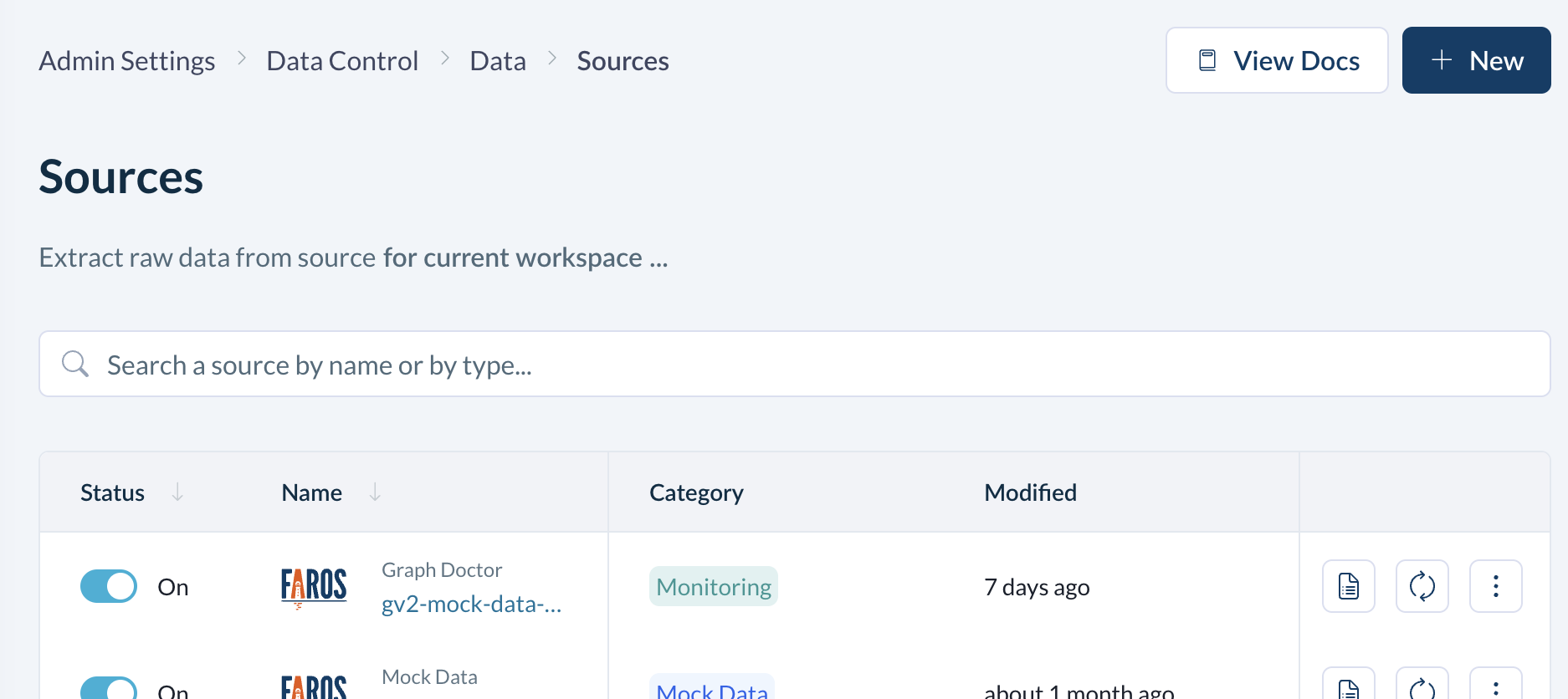

Navigate to the Sources page.

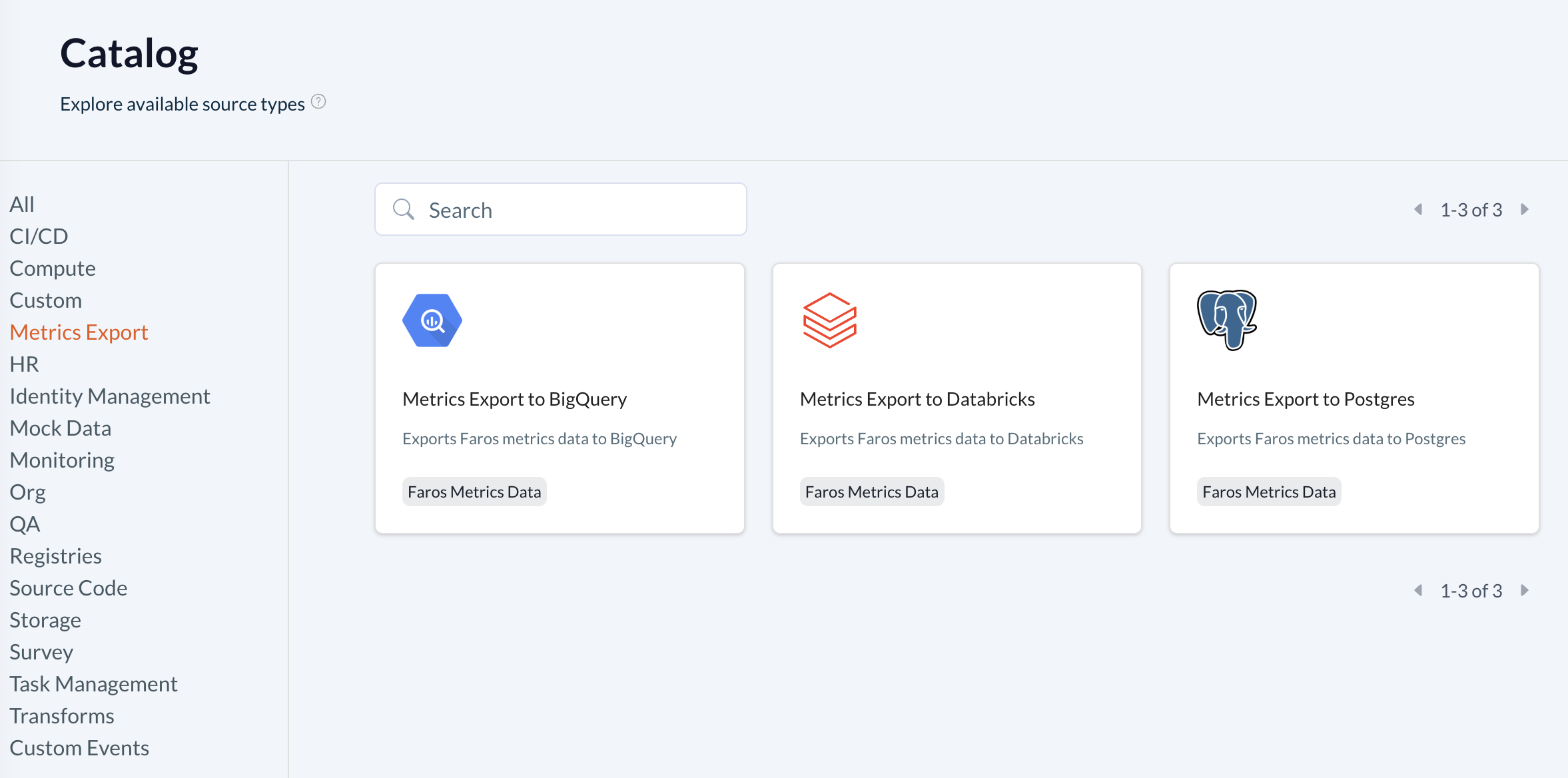

Click + New source.

Choose Metrics Export, then click Metrics Export to Databricks.

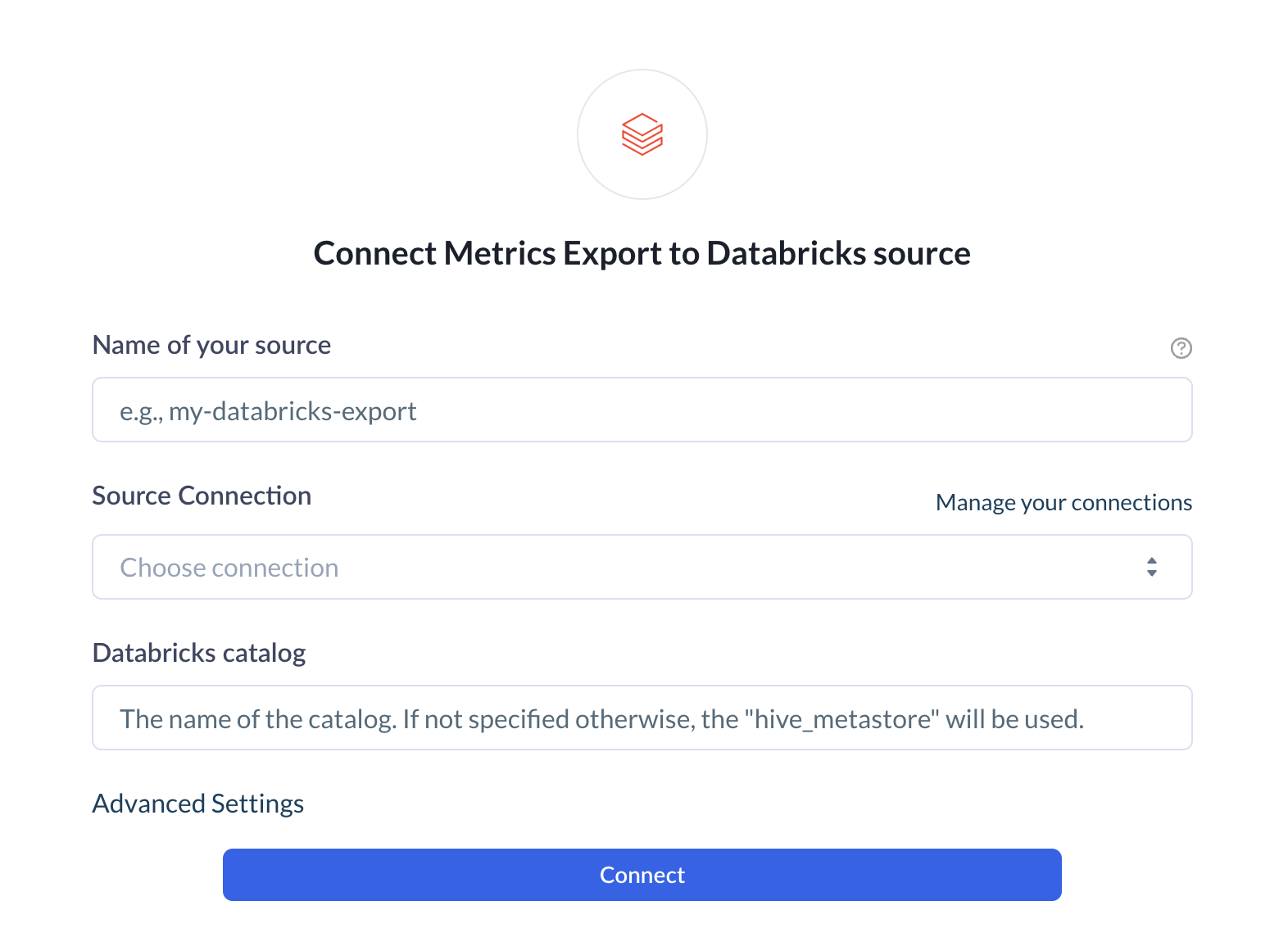

Fill in your source information.

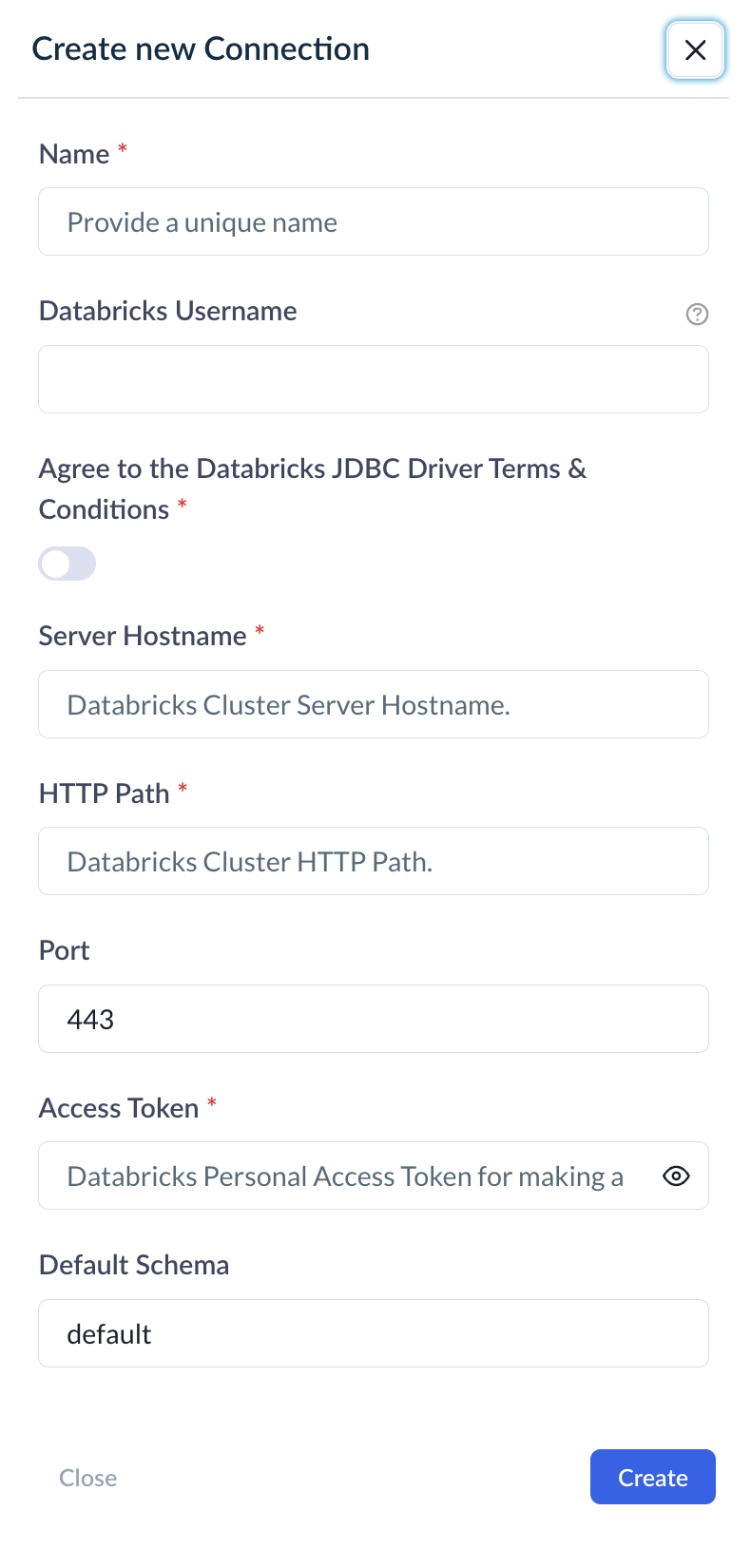

Create a new source connection

Use the values captured in the "Gather Databricks SQL Warehouse connection details" and "Create Databricks Token" steps above to configure the connector:

- Server Hostname - Required. Example: abc-12345678-wxyz.cloud.databricks.com

- HTTP Path - Required. Example: sql/protocolvx/o/1234567489/0000-1111111-abcd90

- Port - Optional. Defaults to "443".

- Personal Access Token - Required. Example: dapi0123456789abcdefghij0123456789AB

- Databricks catalog - Optional. The name of the catalog to write to. Defaults to "hive_metastore".

- Database schema - Optional. The schema the tables are written to. Defaults to "default".
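Taken together, these fields amount to a small configuration: three required values and three with defaults. The sketch below models that, assuming each report table is addressed by a three-level `catalog.schema.table` name. The class, its `table_name` method, and the `deployments` table name are hypothetical illustrations, not the connector's actual code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatabricksExportConfig:
    """Hypothetical model of the source fields listed above."""

    server_hostname: str            # required
    http_path: str                  # required
    token: str                      # required personal access token
    port: int = 443                 # optional, documented default
    catalog: str = "hive_metastore"  # optional, documented default
    schema: str = "default"         # optional, documented default

    def __post_init__(self) -> None:
        # Reject empty values for the required fields.
        for field_name in ("server_hostname", "http_path", "token"):
            if not getattr(self, field_name):
                raise ValueError(f"{field_name} is required")

    def table_name(self, report_table: str) -> str:
        """Each report table lands in its own Delta table under catalog.schema (assumed naming)."""
        return f"{self.catalog}.{self.schema}.{report_table}"


config = DatabricksExportConfig(
    server_hostname="abc-12345678-wxyz.cloud.databricks.com",
    http_path="sql/protocolvx/o/1234567489/0000-1111111-abcd90",
    token="dapi0123456789abcdefghij0123456789AB",
)
print(config.table_name("deployments"))  # "deployments" is a hypothetical report table
```

Leaving the catalog and schema blank in the form corresponds to the defaults here, so tables end up under `hive_metastore.default`.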
