Copilot Causal Analysis

What is the true effect of AI generated code?

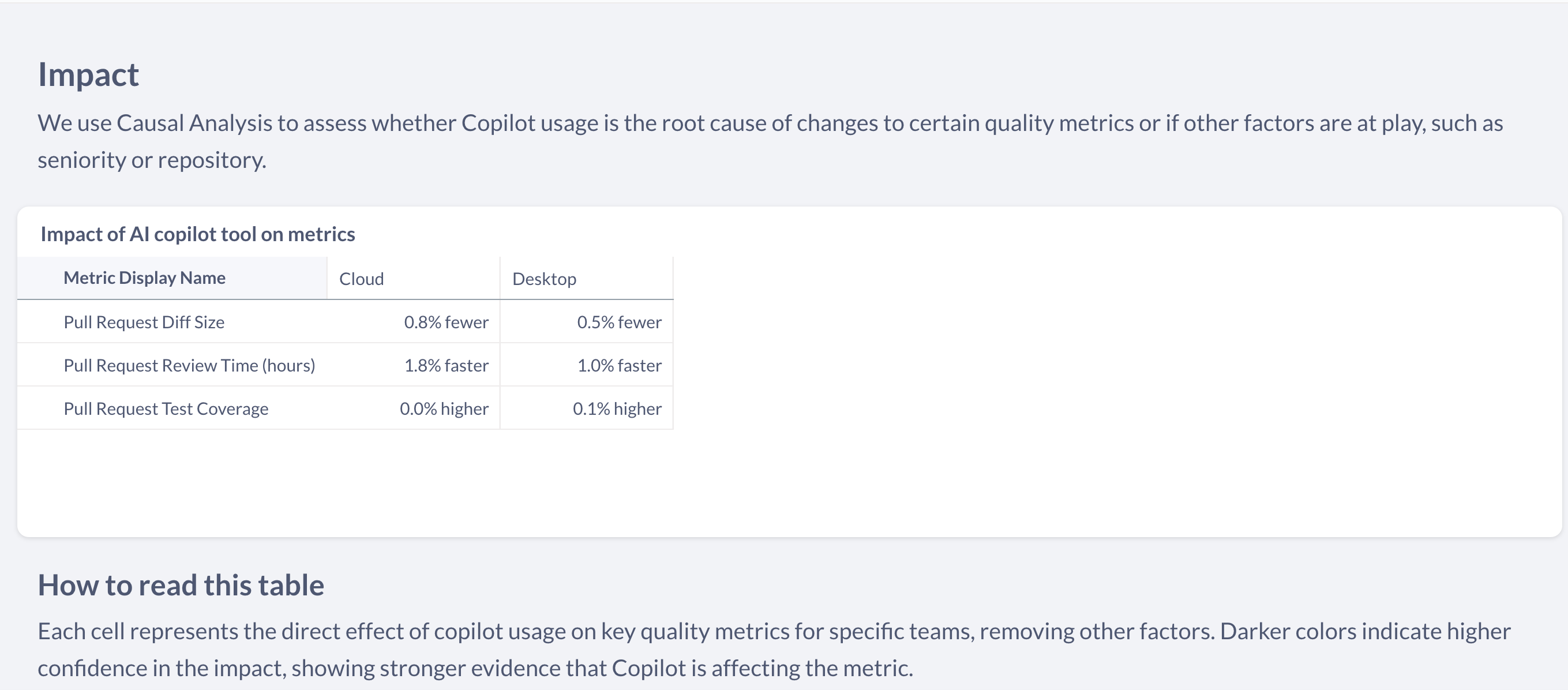

We compute a causal analysis of the effects of Copilot coding assistants on engineering metrics around pull request creation and quality. This approach leverages causal analysis (debiased or double machine learning) to uncover the true effects of Copilot on software engineering productivity metrics, beyond mere correlations. See our blog post for details on how the analysis works.

Table of Causal Insights on Copilot Metrics by Team

The table of causal insights is part of the Copilot Evaluation Module. It presents the Conditional Average Treatment Effect (CATE) for each Pull Request (PR), aggregated by team, to showcase the causal impacts of Copilot usage across different teams within an organization.

Table Components:

- Team Name: The specific team within the organization being analyzed.

- Copilot Usage Frequency: The average number of times Copilot was accessed by the team during the PR development process.

- PR Approval Time: The effect of Copilot usage on the average time it takes for a PR to be approved, indicating speed enhancements or delays.

- PR Diff Size: Changes in PR size, showing whether Copilot influences the volume of code changes submitted.

- Code Coverage: The impact on test coverage levels, demonstrating Copilot's effect on maintaining or improving code validation practices.

- Code Smells: The effect on the presence of code smells, reflecting any potential decline or improvement in code quality due to Copilot.

This granular view empowers engineering leaders to tailor Copilot deployment and training efforts to maximize productivity gains, uphold code quality standards, and ensure thoughtful scaling across the organization. The raw data from the analysis can be found in the Causal Model and Causal Effects tables.

Updated 14 days ago